SVRG

Published:

论文阅读笔记:Accelerating Stochastic Gradient Descent using Predictive Variance Reduction. NIPS 2013.

SVRG

利用方差缩减技术加速SGD的优化过程。

在SGD中,考虑如下的优化问题,最小化样本的经验风险, \(\begin{align} \min P(w ) = E \phi(w) = \min \sum_{i=1}^N \phi_i(w) \end{align}\) 我们假设函数具有较好的性质, \(\begin{align} \phi_i(w) - \phi_i(w') - \nabla \phi_i(w') (w-w') &\le \frac{1}{2} L \Vert w-w' \Vert^2 \\ P(w) - P(w') - \nabla P(w') (w-w') &\ge \frac{1}{2} \mu \Vert w-w' \Vert^2 \\ \end{align}\)

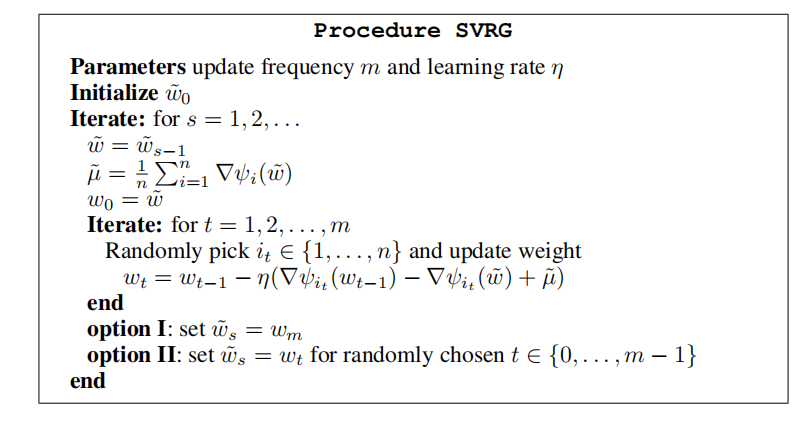

SVRG(Sophisticated Variation Reduced Desend) 在SGD的基础上加入统计计算中常用的方差缩减技术,算法流程如下,

可以得到SVRG算法在期望意义下线性收敛到最优值

\[\begin{align} E[P(\tilde w_s) - P(w_{\star})] &\le \alpha^s E[P(\tilde w_0) - P(w_{\star})]\\ \text{With } \alpha &= \frac{1}{\mu \eta(1-2L \eta)m} + \frac{2 L \eta}{1-2L \eta} <1, w_{\star} = \min_w P(w) \end{align}\]首先可以证明关于最优点的随机梯度的界,

\[\begin{align} \text{Let } g_i(w) &= \phi_i(w) - \phi_i(w_{\star}) - \nabla \phi_i(w_{\star})^T (w- w_{\star}) \\ 0 = g_i(w_{\star}) &\le \min_{\eta} [g_i(w- \eta \nabla g_i(w))] \\ & \le \min_{\eta} [g_i(w) - \eta \Vert \nabla g_i(w) \Vert_2^2+ \frac{1}{2} L \eta^2 \Vert \nabla g_i(w) \Vert_2^2] \\ &= g_i(w) - \frac{1}{2L} \Vert \nabla g_i(w) \Vert_2^2 \\ \end{align}\]代入则可以得到,

\[\begin{align} E\Vert \nabla \phi_i(w) - \nabla \phi_i(w_{\star}) \Vert_2^2 &\le 2L E(g_i(w)-g_i(w_{\star} )) \\ &=2L E[\phi_i(w) - \phi_i(w_{\star}) - \nabla \phi_i(w_{\star})^T (w- w_{\star})] \\ &=2L E[\phi_i(w) - \phi_i(w_{\star})] \\ &= 2L[P(w) - P(w_{\star})] \end{align}\]为了得到$w_{t},w_{t-1}$之间的关系,首先对其差值$v_t$的大小进行估计,

\[\begin{align} \text{Let } v_t &= \nabla\phi_i(w_{t-1}) - \nabla \phi_i(\tilde w) + \tilde \mu \\ E \Vert v_t \Vert_2^2 &= E \Vert \nabla\phi_i(w_{t-1}) - \nabla \phi_i(\tilde w) + \tilde \mu \Vert_2^2 \\ &= E \Vert \nabla\phi_i(w_{t-1}) - \nabla \phi(w_{\star})+ \nabla \phi(w_{\star})-\nabla \phi_i(\tilde w) + \tilde \mu \Vert_2^2 \\ & \le 2E \Vert \nabla\phi_i(w_{t-1}) - \nabla \phi(w_{\star})\Vert_2^2 +2E \Vert \nabla \phi(w_{\star})-\nabla \phi_i(\tilde w) + \tilde \mu \Vert_2^2 \\ &= 2E \Vert \nabla\phi_i(w_{t-1}) - \nabla \phi(w_{\star})\Vert_2^2 +2E \Vert \nabla \phi(w_{\star})-\nabla \phi_i(\tilde w) \Vert_2^2 - \Vert \tilde \mu \Vert_2^2 \\ &\le 2E \Vert \nabla\phi_i(w_{t-1}) - \nabla \phi(w_{\star})\Vert_2^2 +2E \Vert \nabla \phi_i(\tilde w) -\nabla \phi(w_{\star}) \Vert_2^2 \\ &\le 4L [P(w_{t-1})+ P(\tilde w) - 2P(w_{\star})] \end{align}\]进而我们计算每次梯度更新时候的界的变化,随机性仅仅加在$v_t$上面,

\[\begin{align} E \Vert w_t - w_{\star} \Vert_2^2 &= E \Vert w_{t-1} -\eta v_t -w_{\star} \Vert_2^2 \\ &= \Vert w_{t-1}- w_{\star} \Vert_2^2 +\eta^2 E \Vert v_t \Vert_2^2 - 2\eta (w_{t-1}-w_{\star})^T E[v_t] \\ &\le \Vert w_{t-1}- w_{\star} \Vert_2^2 +4\eta^2 L [P(w_{t-1})+ P(\tilde w) - 2P(w_{\star})] - 2\eta (w_{t-1} -w_{\star})^T \nabla P(w_{t-1} ) \\ &\le \Vert w_{t-1}- w_{\star} \Vert_2^2 +4\eta^2 L [P(w_{t-1})+ P(\tilde w) - 2P(w_{\star})] - 2\eta [P(w_{t-1})-P(w_{\star})] \\ \end{align}\]对所有的$m$次梯度下降利用上述不等式,并且根据OptionII随机选择$\tilde w$,

\[\begin{align} 0 \le E \Vert w_t - w_{\star} \Vert_2^2 &\le E \Vert w_0 - w_{\star} \Vert_2^2 + 4\eta^2 Lm E[P(w_{t-1})+ P(\tilde w_{s-1}) - 2P(w_{\star})] - 2\eta mE[P(\tilde w_{s})-P(w_{\star})] \\ &= E \Vert w_0 - w_{\star} \Vert_2^2 + 4\eta^2 L mE[P(\tilde w_{s})+ P(\tilde w_{s-1}) - 2P(w_{\star})] -2\eta m E[P(\tilde w_{s})-P(w_{\star})] \\ &= E \Vert \tilde w_{s-1} - w_{\star} \Vert_2^2 + 4\eta^2 L mE[P(\tilde w_{s})+ P(\tilde w_{s-1}) - 2P(w_{\star})] - 2\eta mE[P(\tilde w_{s})-P(w_{\star})] \\ & \le \frac{2}{\mu} E[P(\tilde w_{s-1}) -P(w_{\star})] + 4\eta^2 L m E[P(\tilde w_{s})+ P(\tilde w_{s-1}) - 2P(w_{\star})] - 2\eta m E[P(\tilde w_{s})-P(w_{\star})] \\ \end{align}\]据此可以得到不等式,最终可以证明其收敛界满足下式,

\[\begin{align} E[P(\tilde w_s) - P(w_{\star})] &\le \frac{4\eta^2Lm + \frac{2}{\mu}}{2\eta m-4 \eta^2 Lm } E[P(\tilde w_{s-1}) - P(w_{\star})] \\ &= (\frac{1}{\mu \eta(1-2L \eta)m} + \frac{2 L \eta}{1-2L \eta}) E[P(\tilde w_{s-1}) - P(w_{\star})] \\ \end{align}\]